How we're using GitHub Actions to transform our error triaging workflow

One of our favorite things at DNSimple is automation, including automating our own workflows. It's a huge time-saver and gives us the space to focus on more important things, like building the best DNS management around.

With this post, we're sharing some insight into how we're using GitHub Actions to transform our error triaging workflow. Though this triage workflow has traditionally been a manual process, we've recently started automating it. The human component hasn't fully been removed, but we've been able to reduce it substantially.

Our development triage workflow

When unexpected errors happen in our applications, we use Bugsnag to collect the error reports and notify us of each occurrence. A new GitHub issue is then automatically created and linked to the error. We regularly review these new issues and methodically triage them to identify the cause. Finally, we use these issues to categorize, prioritize, organize, and assign our development work.

We perform several actions on each GitHub issue that arises:

- Determine the type of error.

- Update the title to be more useful and human-readable.

- Add the correct labels, such as billing or interface.

- Include relevant logs.

- Link to duplicate errors.

- Investigate the cause.

GitHub Actions

When we started this project, GitHub Actions had only recently been released, and it had great potential for listening to events related to GitHub issues. After spending some time in the documentation, we realized that workflows are made up of steps, each of which can run an Action. Actions are reusable chunks of code, and a step's outputs can be passed as inputs to later steps. This mirrored our manual triage process closely, with each step feeding into the next.

To start things off, we focused on creating an Action that read the content of an issue and labeled it accordingly, based on the body of the issue. Because this issue-labeler did not rely on any third-party interactions, it was a simple place to begin.

First, we needed to identify which labels should be applied to a given issue. For this, we created a config.yml file that maps labels to regular expressions. Because the issue's body contains a stack trace, we know that errors in certain files should get certain labels:

admin:
  - /app\/\w+\/admin/
  - /lib\/\w+_admin\.rb/
certificates:
  - /app\/\w+\/certificates/
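The post doesn't show how these patterns become regular expressions. A YAML parser (js-yaml's load function, for example) returns each entry as a plain string such as "/app\/\w+\/admin/", so something has to convert those strings into RegExp objects before they can be matched against an issue. Here is a minimal sketch of that conversion, assuming the YAML has already been parsed into an object of label names and pattern strings; toRegExp and compileLabelMap are hypothetical names, not part of any library.

```javascript
// Hypothetical helpers (not from any library): turn the "/pattern/flags"
// strings that a YAML parser returns for config.yml into RegExp objects.

// "/app\/\w+\/admin/i" -> /app\/\w+\/admin/i
const toRegExp = (source) => {
  const match = /^\/(.*)\/([a-z]*)$/.exec(source)
  // Strings without surrounding slashes are used as the whole pattern
  if (!match) return new RegExp(source)
  const [, pattern, flags] = match
  return new RegExp(pattern, flags)
}

// { label: ['/re/', ...] } -> { label: [RegExp, ...] }
const compileLabelMap = (rawConfig) =>
  Object.fromEntries(
    Object.entries(rawConfig).map(([label, patterns]) => [label, patterns.map(toRegExp)])
  )

// The parsed form of the config.yml above (backslashes doubled for JavaScript strings)
const labelMap = compileLabelMap({
  admin: ['/app\\/\\w+\\/admin/', '/lib\\/\\w+_admin\\.rb/'],
  certificates: ['/app\\/\\w+\\/certificates/'],
})

console.log(labelMap.admin[0].test('app/controllers/admin/users_controller.rb')) // true
```

It's worth avoiding the g flag in these patterns: calling test() on a global regular expression advances its lastIndex between calls, which can make repeated matches against different issues inconsistent.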

Then, we created issue-labeler.js with a basic asynchronous run function, so that it can await work such as loading the configuration:

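const core = require('@actions/core')
const github = require('@actions/github')

const run = async () => {
  const configPath = core.getInput('configuration-path', { required: true }) // set by GH inputs
  const labelMap = await loadConfig(configPath) // loadConfig (not shown) reads and parses config.yml
  core.info(`Loaded labels: ${Object.keys(labelMap).join(', ')}`)
}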

Next, we added a function to take our labelMap and return the appropriate labels for a given content string:

// Keep every label that has at least one pattern matching the content
const determineLabels = (content, labelMap) => {
  return Object.keys(labelMap).filter((label) => {
    return labelMap[label].some((regex) => {
      return regex.test(content)
    })
  })
}
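To see determineLabels on its own, here is a small, self-contained run against a made-up stack trace. The label map is written with RegExp literals, assuming config loading has already turned the YAML strings into regular expressions; the error message and file paths are invented for illustration.

```javascript
// Same determineLabels as above, repeated so this example runs on its own
const determineLabels = (content, labelMap) =>
  Object.keys(labelMap).filter((label) =>
    labelMap[label].some((regex) => regex.test(content))
  )

// Hypothetical label map, already compiled to RegExp objects
const labelMap = {
  admin: [/app\/\w+\/admin/, /lib\/\w+_admin\.rb/],
  certificates: [/app\/\w+\/certificates/],
}

// A made-up stack trace excerpt standing in for an issue body
const body = [
  'NoMethodError: undefined method `expires_at` for nil:NilClass',
  'app/services/certificates/renewer.rb:42:in `call`',
  'app/controllers/certificates_controller.rb:17:in `update`',
].join('\n')

console.log(determineLabels(body, labelMap)) // [ 'certificates' ]
```

Only the first frame lives under an app/<something>/certificates/ directory, so certificates is the single label returned; nothing in the trace matches either admin pattern.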

Now, we can use the github object from @actions/github to build a GitHub API client and add labels to an issue. As you can see, the github.context object carries useful details about the triggering event, such as the repo and payload:

const token = core.getInput('repo-token', { required: true }) // set by GH inputs
const client = github.getOctokit(token)

// addLabels must be async because it awaits the API call
const addLabels = async (client, issueNumber, labels) => {
  // REST methods live under client.rest in @actions/github v5 and later
  await client.rest.issues.addLabels({
    owner: github.context.repo.owner,
    repo: github.context.repo.repo,
    issue_number: issueNumber,
    labels: labels
  })
}
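Octokit takes care of the HTTP details, but it can help to see the request addLabels sends: a POST to /repos/{owner}/{repo}/issues/{issue_number}/labels with a JSON body listing the labels. The sketch below builds that request without sending it; buildAddLabelsRequest is a hypothetical helper, and the owner, repo, issue number, and token are placeholders.

```javascript
// Hypothetical helper: builds (but does not send) the request behind
// client.rest.issues.addLabels, i.e.
// POST /repos/{owner}/{repo}/issues/{issue_number}/labels with {"labels": [...]}
const buildAddLabelsRequest = (owner, repo, issueNumber, labels, token) => ({
  url: `https://api.github.com/repos/${encodeURIComponent(owner)}/${encodeURIComponent(repo)}/issues/${issueNumber}/labels`,
  options: {
    method: 'POST',
    headers: {
      Accept: 'application/vnd.github+json',
      Authorization: `Bearer ${token}`,
      'Content-Type': 'application/json',
    },
    // POST adds these labels and keeps any the issue already has
    body: JSON.stringify({ labels }),
  },
})

// Placeholder values for illustration only
const { url, options } = buildAddLabelsRequest('example-org', 'example-repo', 123, ['admin', 'bugsnag'], 'TOKEN')
console.log(options.method, url)
// POST https://api.github.com/repos/example-org/example-repo/issues/123/labels
console.log(options.body)
// {"labels":["admin","bugsnag"]}
```

On Node 18 or later, fetch(url, options) would send it. The choice of POST matters for a labeler: it appends to the issue's existing labels, whereas a PUT to the same path replaces them, which would wipe out the triage label a human just applied.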

With these functions in place, we need to tweak our run function to use them:

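const run = async () => {
  const configPath = core.getInput('configuration-path', { required: true }) // set by GH inputs
  const labelMap = await loadConfig(configPath)
  // The issue body can be empty, so fall back to an empty string
  const labels = determineLabels(github.context.payload.issue.body || '', labelMap)

  if (labels.length > 0) {
    await addLabels(client, github.context.payload.issue.number, labels)
  }
}

// Mark the step as failed if anything above throws
run().catch((error) => core.setFailed(error.message))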

Finally, if these labels need to be consumed by another GitHub Action at a later stage in the workflow, we can expose them with core.setOutput from inside run:

core.setOutput('labels', labels.join(','))
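core.setOutput hides the mechanics, but it helps to know where the value goes. On current runners, a step's outputs are written to a file whose path is in the GITHUB_OUTPUT environment variable, and for a single-line value the documented format is a name=value line, which the runner reads after the step finishes. The helper below is a hypothetical stand-in that only demonstrates that format; recent versions of @actions/core write a delimiter-based form so that multi-line values also work, so in a real Action core.setOutput remains the call to use.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Hypothetical stand-in for core.setOutput, single-line values only:
// appends "name=value" to the file named by GITHUB_OUTPUT
const writeOutput = (name, value, outputFile = process.env.GITHUB_OUTPUT) => {
  if (!outputFile) throw new Error('GITHUB_OUTPUT is not set')
  if (String(value).includes('\n')) {
    throw new Error('multi-line values need the delimiter format')
  }
  fs.appendFileSync(outputFile, `${name}=${value}${os.EOL}`)
}

// Local demo: write to a temporary file instead of the runner's
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'gh-output-'))
const tmpFile = path.join(dir, 'output')
writeOutput('labels', ['admin', 'bugsnag'].join(','), tmpFile)
console.log(fs.readFileSync(tmpFile, 'utf8').trim()) // labels=admin,bugsnag
```

This is also why the labels are joined into a single comma-separated string: step outputs are always strings, so later steps see labels as one value such as admin,bugsnag.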

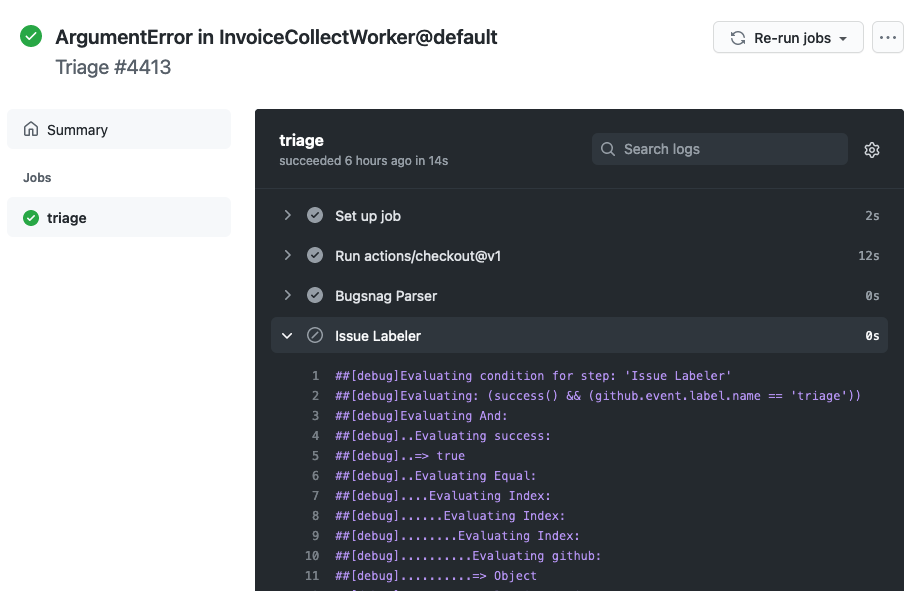

GitHub workflows

The great thing about GitHub Actions is that the same Action can be reused in multiple workflows. With a simple YAML configuration, we can add if conditions to control when a step runs. These conditions can even use the outputs of a previous step. For example, here we only fetch Bugsnag logs if the labeler applied a bugsnag label:

name: "Development Triage"

on:
  issues:
    types: [labeled]

jobs:
  triage:
    runs-on: ubuntu-latest
    permissions:
      contents: read # needed by actions/checkout
      issues: write # lets GITHUB_TOKEN add labels
    steps:
      - uses: actions/checkout@v4
      - name: Issue Labeler
        uses: ./.github/actions/issue-labeler
        if: github.event.label.name == 'triage'
        id: labeler
        with:
          repo-token: ${{ secrets.GITHUB_TOKEN }} # supply input variables
      - name: Bugsnag Logs
        uses: ./.github/actions/bugsnag-logs
        # contains() is provided by GitHub Actions; the labels output comes from setOutput() in our issue-labeler Action
        if: contains(steps.labeler.outputs.labels, 'bugsnag')
        id: bugsnag
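According to GitHub's expressions documentation, contains(search, item) performs a case-insensitive substring check when search is a string, and an element check when search is an array. Because the labeler outputs a comma-joined string, the Bugsnag condition is a substring test. The sketch below mirrors that behavior in JavaScript to show the edge case; containsExpr and hasLabel are hypothetical names, and bugsnag-muted is a made-up label.

```javascript
// Mirrors GitHub's contains(search, item) for a string search value:
// a case-insensitive substring check
const containsExpr = (search, item) =>
  String(search).toLowerCase().includes(String(item).toLowerCase())

// Stricter, hypothetical alternative: split the comma-joined output and
// compare whole labels instead of substrings
const hasLabel = (joinedLabels, label) =>
  String(joinedLabels)
    .split(',')
    .map((name) => name.trim().toLowerCase())
    .includes(label.toLowerCase())

const output = 'admin,bugsnag-muted' // made-up labeler output
console.log(containsExpr(output, 'bugsnag')) // true: 'bugsnag' is a substring of 'bugsnag-muted'
console.log(hasLabel(output, 'bugsnag')) // false: no label is exactly 'bugsnag'
```

If that edge case matters, one option is to have the Action output JSON instead, for example core.setOutput('labels', JSON.stringify(labels)), and write the condition as contains(fromJSON(steps.labeler.outputs.labels), 'bugsnag'), which compares whole array elements.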

What we learned

Outside of unit tests, which were one hedge against this, testing our workflow could really only be done in production. We mitigated that risk further by creating a test repository where we could run our Actions without affecting the rest of the team. GitHub's logs for each workflow run also made it easy to follow every workflow and Action as it executed.

What's next?

Along with the issue-labeler, we've added several other Actions, including ones that fetch and attach logs from Bugsnag, track which issues are duplicates, and rename issue titles to be more actionable.

We still have parts of our process that consist of "monkey work" and could be improved, like adding errors to Airtable. Additionally, our Actions are designed only to provide supplementary information, not to take decisive action. Once we're confident that they consistently do the right thing, we can expand them to be more proactive.

Though it's impossible to completely remove the human element of our triaging process, this project has already paid dividends by allowing our development team to reclaim a lot of time for other work.

Automate your DNS management

We don't just automate processes to make our work better. We automate to make your domain management easier, so you can reclaim your time, too. If you're ready to experience DNSimple's expert DNS management and domain hosting for yourself, give us a try free for 30 days. Have more questions? Drop us a line - we're always happy to help.

Dallas Read

Dream. Risk. Win. Repeat.

We think domain management should be easy.

That's why we continue building DNSimple.